Combinatorial optimization based on stochastic relaxation

| Title: | Combinatorial optimization based on stochastic relaxation |

Image:Stochastic.jpg |

| Description: | The project will focus on the study, implementation, comparison and

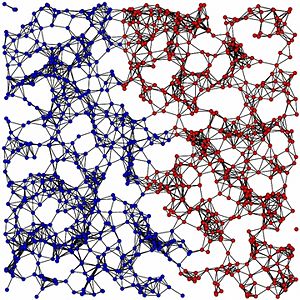

analysis of different algorithms for the optimization of pseudo-Boolean functions, i.e., functions defined over binary variables with values in R. These functions have been studied a lot in the mathematical programming literature, and different algorithms have been proposed (1). More recently, the same problems have been faced in evolutionary computations, with the use of genetic algorithms, and in particular estimation of distribution algorithms (2,3). Estimation of distribution algorithms are a recent meta-heuristic, where classical crossover and mutation operators used in genetic algorithms are replaced with operators that come from statistics, such as sampling and estimation. The focus will be on the implementation of a new algorithm able to combine different approaches (estimation and sampling, from one side, and exploitation of prior knowledge about the structure of problem, on the other), together with the comparison of the results with existing techniques that historically appear in different (and often separated) communities. Good coding (C/C++) abilities are required. Since the approach will be based on statistical models, the student is supposed to be comfortable with notions that come from probability and statistics courses. The project could require some extra effort in order to build and consolidate some background in math, especially in Bayesian statistics and MCMC techniques, such as Gibbs and Metropolis samplers (4). The project can be extended to master thesis, according to interesting and novel directions of research that will emerge in the first part of the work. Possible ideas may concern the proposal of new algorithms able to learn existing dependencies among the variables in the function to be optimized, and exploit them in order to increase the probability to converge to the global optimum. Picture taken from http://www.ra.cs.uni-tuebingen.de/ Bibliography

| |

| Tutor: | MatteoMatteucci (matteo.matteucci@polimi.it), LuigiMalago (malago@elet.polimi.it) | |

| Start: | 2009/10/01 | |

| Students: | 1 - 2 | |

| CFU: | 5 - 20 | |

| Research Area: | Machine Learning | |

| Research Topic: | Information Geometry, Stochastic Optimization | |

| Level: | Ms | |

| Type: | Course, Thesis | |

| Status: | Closed |