
Revision as of 11:38, 10 December 2015

| Title: | Sound source localization for robots |
|---|---|
| Description: | Analysis of the state-of-the-art of binaural systems for audio detection and localization in robotics |
| Tutor: | MatteoMatteucci |
| Start: | 2015/10/08 |
| Number of students: | 1 |
| CFU: | 20 |

Analysis of the state-of-the-art of sound source localization algorithms. Application and improvement of existing systems by developing a binaural model to be applied on a robot, initially based on stereo acquisition and then on different types of sensors (microphone arrays, MEMS microphones, etc.).


State-of-the-art

Psychoacoustic measurements

Robot audition and active perception of sound sources are still hard tasks today. The common starting point of the existing algorithms is the knowledge of a few psychoacoustic measurements. The main features are ITD (Interaural Time Difference) and ILD (Interaural Level Difference), which describe how humans localize a sound source.

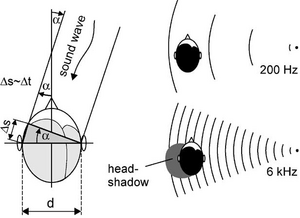

ITD measures the difference in time of arrival of a sound wave between the two ears (it is usually expressed in ms). It depends on the distance between the ears, the frequency content of the sound wave and the angle of incidence of the incoming sound. For geometric reasons, ITD gives useful measurements only below a frequency threshold of about 1500 Hz: above it, the wavelength becomes comparable to or smaller than the distance between the ears, so the phase of a periodic signal no longer identifies a unique delay. In some systems it can be useful to perform this analysis in the frequency domain instead of the time domain; the equivalent measure there is the IPD (Interaural Phase Difference).
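The time-domain ITD can be estimated as the lag that maximizes the cross-correlation between the two ear signals, and then converted to an IPD for a given frequency. The sketch below is a minimal, unoptimized illustration in plain Python; the function names, the 16 kHz sampling rate and the synthetic delayed-noise test signal are assumptions made only for illustration.

```python
import math
import random


def estimate_itd(left, right, fs, max_lag):
    """Return the ITD in seconds as the lag (in samples, divided by fs) that
    maximizes the cross-correlation of two equal-length ear signals.
    A positive value means the right ear receives the sound later."""
    n = len(left)
    best_lag, best_value = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        # Correlate left[i] with right[i + lag] over the overlapping samples
        value = sum(left[i] * right[i + lag]
                    for i in range(max(0, -lag), min(n, n - lag)))
        if value > best_value:
            best_lag, best_value = lag, value
    return best_lag / fs


def itd_to_ipd(itd, freq):
    """IPD in radians of a frequency component at `freq` Hz, wrapped to [-pi, pi)."""
    phase = 2 * math.pi * freq * itd
    return (phase + math.pi) % (2 * math.pi) - math.pi


# Synthetic test: the same noise burst reaches the right ear 5 samples later
fs = 16000
delay = 5
rng = random.Random(0)
source = [rng.uniform(-1.0, 1.0) for _ in range(400)]
left = source + [0.0] * delay
right = [0.0] * delay + source

# 16 samples at 16 kHz = 1 ms of lag, enough for a head-sized microphone spacing
itd = estimate_itd(left, right, fs, max_lag=16)
ipd_500 = itd_to_ipd(0.0005, 500.0)
ipd_1500 = itd_to_ipd(0.0005, 1500.0)
```

With an ITD of 0.5 ms, a 500 Hz component gets an IPD of π/2, while a 1500 Hz component gets 1.5π, which wraps to -π/2 and is indistinguishable from a lag in the opposite direction. This wrapping is the ambiguity behind the frequency threshold mentioned above.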

ILD measures the difference in sound pressure level (dB) between the two ears. As for ITD, ILD depends on the frequency content of the sound wave, on the angle of incidence and on the physical structure of the listener. For these reasons, ILD measurements are most useful at high frequencies, where the head shadows the far ear more strongly (many state-of-the-art systems use the same 1500 Hz threshold as for ITD).
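A broadband ILD can be computed as the ratio of the RMS levels of the two ear signals, expressed in dB. Real systems usually compute it per frequency band (for instance only above the 1500 Hz threshold); this sketch skips the band splitting, and the function names and the test tone are hypothetical.

```python
import math


def rms(signal):
    """Root-mean-square level of a sequence of samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def ild_db(left, right, eps=1e-12):
    """Broadband ILD in dB; positive when the left ear signal is louder.
    `eps` avoids division by zero and log of zero for silent input."""
    return 20.0 * math.log10((rms(left) + eps) / (rms(right) + eps))


# Hypothetical 3 kHz tone attenuated by half at the right (shadowed) ear
fs = 16000
left = [0.5 * math.sin(2 * math.pi * 3000 * k / fs) for k in range(160)]
right = [0.5 * x for x in left]
ild = ild_db(left, right)
```

Halving the amplitude at the right ear gives an ILD of about +6.02 dB (20·log10 2).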

ITD and ILD measurements are easy to analyze and understand, so they are used in many state-of-the-art algorithms whose main task is localization. When the aim of the system is to analyze the surrounding environment or to study the sound source in more depth, ITD/ILD may not be enough. A more complex measurement used in this case is the HRIR/HRTF (Head Related Impulse Response/Head Related Transfer Function). This feature represents the acquiring object (for example, the human who is listening) as a filter that depends on its geometry, on the surrounding environment, on frequency, on the source position in space, etc. The results achieved with HRTFs are more precise than those obtained with ITD/ILD alone, but they require a much more complex acquisition and processing stage to compute this feature, more expensive instruments, and the results become directly tied to the specific conditions in which the experiment is done.
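Modelling the listener as a filter means that, for a given source position, each ear signal is the source signal convolved with that ear's HRIR (equivalently, multiplied by the HRTF in the frequency domain). The sketch below assumes a pair of made-up, very short HRIRs; measured HRIRs are much longer and differ for every source position.

```python
def convolve(signal, impulse_response):
    """Full linear convolution, computed directly (O(N*M))."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, x in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += x * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter a mono source through the left and right HRIRs measured for one
    source position, giving the signals that would reach each ear."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Made-up HRIR pair for a source on the listener's left: the right (far) ear
# response arrives 3 samples later and with lower amplitude.
hrir_left = [1.0, 0.3]
hrir_right = [0.0, 0.0, 0.0, 0.5, 0.15]

left, right = render_binaural([1.0, -0.5], hrir_left, hrir_right)
```

Even this toy pair carries both binaural cues: the far-ear response is delayed (an ITD) and attenuated (an ILD). Measured HRTFs add the position-dependent spectral detail that the toy example does not model.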

In short, HRTF measurements give better and more stable results, but they cannot be generalized to a general-purpose system, because they strictly depend on the acquiring object, on human physiology and on the environment in which they were measured. They are also computationally expensive. ITD/ILD are simpler, less computationally expensive features that can easily be adapted to any environment and situation, at the cost of a simpler model.

For our primary task, a simple and stable source localization algorithm for robotics with no intention of emulating the human perception system, working with ITD/ILD could be sufficient. If in the future we want to go deeper into the analysis of human physiology, human perception or the environment, HRTF could be a better choice.
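For this simple localization task, the azimuth can be recovered from an estimated ITD by inverting a free-field, far-field model of two microphones, ITD = d·sin(θ)/c. The sketch assumes a microphone distance of 0.18 m and c = 343 m/s; both are illustrative values, and the model ignores diffraction around the robot's head.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, dry air at about 20 degrees C


def azimuth_from_itd(itd, mic_distance=0.18, c=SPEED_OF_SOUND):
    """Azimuth in degrees from an ITD in seconds, assuming a far-field source and
    two microphones in free field: itd = mic_distance * sin(azimuth) / c.
    0 degrees is straight ahead; positive angles point towards the microphone
    that receives the sound first."""
    s = c * itd / mic_distance
    # Noisy ITD estimates can exceed the physical maximum d / c, so clamp
    s = max(-1.0, min(1.0, s))
    return math.degrees(math.asin(s))


# ITD that the model predicts for a source 30 degrees off-axis
itd_30 = 0.18 * math.sin(math.radians(30.0)) / SPEED_OF_SOUND
azimuth = azimuth_from_itd(itd_30)
```

A two-microphone ITD cannot distinguish a source in front from its mirror image behind the array (front-back ambiguity), so a real system would need head motion, an extra microphone or spectral cues to resolve it.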

Existing systems

Here we briefly describe the state-of-the-art systems.

Nakashima, Hiromichi, and Toshiharu Mukai. "3D sound source localization system based on learning of binaural hearing." [1]

In this paper, Nakashima proposes a robot with two sensors (two microphones) and two humanoid pinnae to achieve both horizontal and vertical detection and localization. He uses ITD as the feature for azimuth and spectral features for elevation. In this way, Nakashima creates a physical model, a sort of simplified HRTF: a transfer function between the incoming signal and the sensors of the "humanoid" robot, which is composed only of ears and a quasi-spherical main body. The training set is built by feeding the system with white noise emitted from every azimuth and elevation position and mapping the minimum of this mixed feature vector (composed of ITD and spectral content) to the source position through a neural network.
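The learning step can be illustrated with a much simpler stand-in for the neural network used in the paper: a nearest-neighbour lookup over feature vectors recorded while noise is played from known positions. The feature layout (ITD in ms plus one spectral cue), the numbers in the table and the function name are hypothetical; in practice the features would need to be normalized so that no single cue dominates the distance.

```python
import math


def localize(table, features):
    """Return the trained (azimuth, elevation) whose stored feature vector is
    closest, in Euclidean distance, to the observed feature vector."""
    position, _ = min(table, key=lambda entry: math.dist(entry[1], features))
    return position


# Hypothetical training table: (azimuth_deg, elevation_deg) -> (ITD in ms,
# one spectral cue). Values are invented; real entries would be measured by
# playing white noise from each position on the training grid.
table = [
    ((0, 0), (0.00, 8.0)),
    ((30, 0), (0.26, 8.0)),
    ((30, 30), (0.26, 9.5)),
    ((-30, 0), (-0.26, 8.0)),
]

estimate = localize(table, (0.25, 9.3))
```

The ITD component separates positions in azimuth, while the spectral component separates positions that share the same azimuth but differ in elevation.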

Deleforge, Antoine, and Radu Horaud. "The cocktail party robot: Sound source separation and localisation with an active binaural head."[2]

In this work, Deleforge implicitly uses the HRTF jointly with ITD and IPD. The incoming sound is acquired with an active binaural robot, a system with a realistic human head shape that can move in the horizontal and vertical planes (pan and tilt movements). The sound source stays at a fixed point, while the robot changes its orientation with pan and tilt movements. Using a humanoid head means that the input signal is implicitly filtered by the HRTF of this robot. The 2D separation and localization algorithm is based on a probabilistic model, a Gaussian Mixture Model solved via Expectation Maximization, using ITD and IPD as cues.
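The core of the probabilistic approach, fitting a Gaussian Mixture Model with Expectation Maximization, can be illustrated in one dimension: each source produces a cluster of interaural cue observations, and EM estimates the cluster weights, means and variances. This is not the model of the paper, which works on richer binaural features; the synthetic ITD values (in ms), the two-source setup and the initial means are assumptions for illustration.

```python
import math
import random


def normal_logpdf(x, mean, var):
    """Log-density of a 1-D Gaussian."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)


def gmm_em_1d(x, init_means, n_iter=100, var_floor=1e-12):
    """Fit a 1-D Gaussian mixture to the samples `x` with EM, starting from
    one initial mean per component. Returns (weights, means, variances)."""
    n, k = len(x), len(init_means)
    means = list(init_means)
    mu = sum(x) / n
    variances = [max(sum((v - mu) ** 2 for v in x) / n, var_floor)] * k
    weights = [1.0 / k] * k
    for _ in range(n_iter):
        # E-step: responsibility of each component for each sample,
        # normalized in the log domain to avoid underflow
        resp = []
        for v in x:
            logs = [math.log(weights[j]) + normal_logpdf(v, means[j], variances[j])
                    for j in range(k)]
            top = max(logs)
            probs = [math.exp(lp - top) for lp in logs]
            total = sum(probs)
            resp.append([p / total for p in probs])
        # M-step: re-estimate weights, means and variances
        for j in range(k):
            nj = sum(r[j] for r in resp)
            if nj == 0.0:
                continue  # leave an empty component unchanged
            weights[j] = nj / n
            means[j] = sum(r[j] * v for r, v in zip(resp, x)) / nj
            variances[j] = max(
                sum(r[j] * (v - means[j]) ** 2 for r, v in zip(resp, x)) / nj,
                var_floor)
    return weights, means, variances


# Synthetic ITD observations (ms) from two simultaneous sources
rng = random.Random(1)
itds = ([rng.gauss(-0.3, 0.02) for _ in range(100)]
        + [rng.gauss(0.2, 0.02) for _ in range(60)])
weights, means, variances = gmm_em_1d(itds, init_means=[-0.2, 0.1])
```

Each recovered mean is an ITD estimate for one source (which could then be mapped to an azimuth), and the E-step responsibilities (not returned here) indicate which source dominates each observation, which is the basis for separation.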

Pros and cons of existing approaches

Essential bibliography

Haykin, Simon, and Zhe Chen. "The cocktail party problem." Neural computation 17.9 (2005): 1875-1902 [3]

Deleforge, Antoine, and Radu Horaud. "The cocktail party robot: Sound source separation and localisation with an active binaural head." Proceedings of the seventh annual ACM/IEEE international conference on Human-Robot Interaction. ACM, 2012. [4]

Deleforge, Antoine, Radu Horaud, Yoav Y. Schechner, and Laurent Girin. "Co-Localization of Audio Sources in Images Using Binaural Features and Locally-Linear Regression." IEEE Transactions on Audio, Speech and Language Processing 23.4 (2015): 718-731. [5]

Schwarz, Andreas, and Walter Kellermann. "Coherent-to-Diffuse Power Ratio Estimation for Dereverberation." Audio, Speech, and Language Processing, IEEE/ACM Transactions on 23.6 (2015): 1006-1018. [6]

Willert, Volker, et al. "A probabilistic model for binaural sound localization." Systems, Man, and Cybernetics, Part B: Cybernetics, IEEE Transactions on 36.5 (2006): 982-994. [7]

Lu, Yan-Chen, and Martin Cooke. "Binaural estimation of sound source distance via the direct-to-reverberant energy ratio for static and moving sources." Audio, Speech, and Language Processing, IEEE Transactions on 18.7 (2010): 1793-1805. [8]

Deleforge, Antoine, and Radu Horaud. "Learning the direction of a sound source using head motions and spectral features." (2011): 29. [9]

Le Roux, Jonathan, and Emmanuel Vincent. "A categorization of robust speech processing datasets." (2014). [10]