Difference between revisions of "Talk:Graphical user interface for an autonomous wheelchair"

Revision as of 09:24, 30 May 2008

General

The interface is used mainly to drive the Lurch wheelchair. It has different screens, corresponding to the different rooms or environments where the wheelchair can move. The screens are organized hierarchically: a main screen lets the user choose whether to drive the wheelchair or perform other tasks. The screens used for driving the wheelchair show a map of the environment at different levels of detail; e.g., one map might show just the rooms of a house, and another might show the living room with the furniture and ‘interesting’ positions (near the table, in front of the TV, beside the window...).

The interface should be as accessible as possible. It can be driven by a BCI, or used with the touch screen or the keyboard. The BCI is based on the P300 and ErrP potentials, so the interface should highlight the possible choices one at a time (or in orthogonal groups if the choices are numerous), and show the choice detected by the BCI for ErrP-based confirmation.
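As a rough illustration of highlighting in orthogonal groups, the sketch below splits a grid of choices into row groups and column groups, so that each choice belongs to exactly one row and one column. The function name and the row-major numbering of choices are assumptions made for this example, not part of the project.

```python
from typing import List, Tuple


def row_column_groups(n_rows: int, n_cols: int) -> Tuple[List[List[int]], List[List[int]]]:
    """Return (row groups, column groups) for choices numbered row by row.

    Hypothetical helper: choice index = row * n_cols + column.
    """
    rows = [[r * n_cols + c for c in range(n_cols)] for r in range(n_rows)]
    cols = [[r * n_cols + c for r in range(n_rows)] for c in range(n_cols)]
    return rows, cols


# A 2 x 3 grid of choices: every choice is the intersection of one row
# group and one column group.
rows, cols = row_column_groups(2, 3)
selected = set(rows[1]) & set(cols[2])
```

With six choices this needs five highlights per repetition (two rows plus three columns) instead of six; the saving grows with the size of the grid.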

Maybe the screen should be updated while the wheelchair navigates; e.g., when the wheelchair enters the kitchen, the GUI shows the map of the kitchen.

To Do

- Feasibility check: wxWidgets and OpenMaia. Check if using OpenMaia with wxWidgets is okay for the stimulation synchronization. The timing difference between the highlighting of a choice and the switching of the synchronization square should be no more than the duration of a screen frame (about 16 ms for a 60 Hz screen).

- Writing of a communication protocol between the GUI and the BCI2000 application developed in the project Online P300 and ErrP recognition with BCI2000. To be done together with Andrea Sgarlata.

- Development of a storage system to store information about the layout and the action of the interfaces; an extension of the XML format used by OpenMaia to describe keyboards should be possible.
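The timing requirement in the feasibility check above can be written down as a small test. This is only a sketch: the function names are hypothetical, and it assumes that timestamps for the highlight and for the synchronization square switch are available in milliseconds from the same clock.

```python
def frame_duration_ms(refresh_hz: float) -> float:
    """Duration of one screen frame in milliseconds."""
    return 1000.0 / refresh_hz


def within_one_frame(highlight_ms: float, sync_square_ms: float,
                     refresh_hz: float = 60.0) -> bool:
    """True if the two events are at most one frame apart.

    Assumption: both timestamps come from the same clock.
    """
    return abs(highlight_ms - sync_square_ms) <= frame_duration_ms(refresh_hz)


# At 60 Hz one frame lasts about 16.7 ms.
ok = within_one_frame(100.0, 110.0)       # 10 ms apart
too_late = within_one_frame(100.0, 120.0)  # 20 ms apart
```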

Communication Protocol Requirements

- Cross-platform (at least Linux and Windows)

- Based on the IP protocol

- Asynchronous (as much as possible), so as to not block remote processes

- Preferably, the protocol should be in ASCII, with fixed-width fields (the number of fields is variable, by necessity).
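One possible reading of the ASCII, fixed-width requirement is sketched below. The field width of 8 characters, the space padding, and the `MSGA` tag are assumptions chosen for illustration; the actual field layout is not defined on this page.

```python
from typing import List

FIELD_WIDTH = 8  # hypothetical width; the real value is still to be decided


def encode_fields(fields: List[object]) -> bytes:
    """Pack a variable number of fields into fixed-width ASCII."""
    parts = []
    for field in fields:
        text = str(field)
        if len(text) > FIELD_WIDTH:
            raise ValueError(f"field too long for {FIELD_WIDTH} chars: {text!r}")
        parts.append(text.ljust(FIELD_WIDTH))  # pad with spaces on the right
    return "".join(parts).encode("ascii")


def decode_fields(payload: bytes) -> List[str]:
    """Split a payload back into its fields, dropping the padding."""
    text = payload.decode("ascii")
    if len(text) % FIELD_WIDTH != 0:
        raise ValueError("payload length is not a multiple of the field width")
    return [text[i:i + FIELD_WIDTH].rstrip()
            for i in range(0, len(text), FIELD_WIDTH)]


payload = encode_fields(["MSGA", 3, 12])
fields = decode_fields(payload)
```

Because every field has the same width, the receiver can work out the number of fields from the payload length alone, which fits the requirement that the field count be variable.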

To Do

- List of the pieces of information to be transferred between the application and the GUI

Communication Protocol

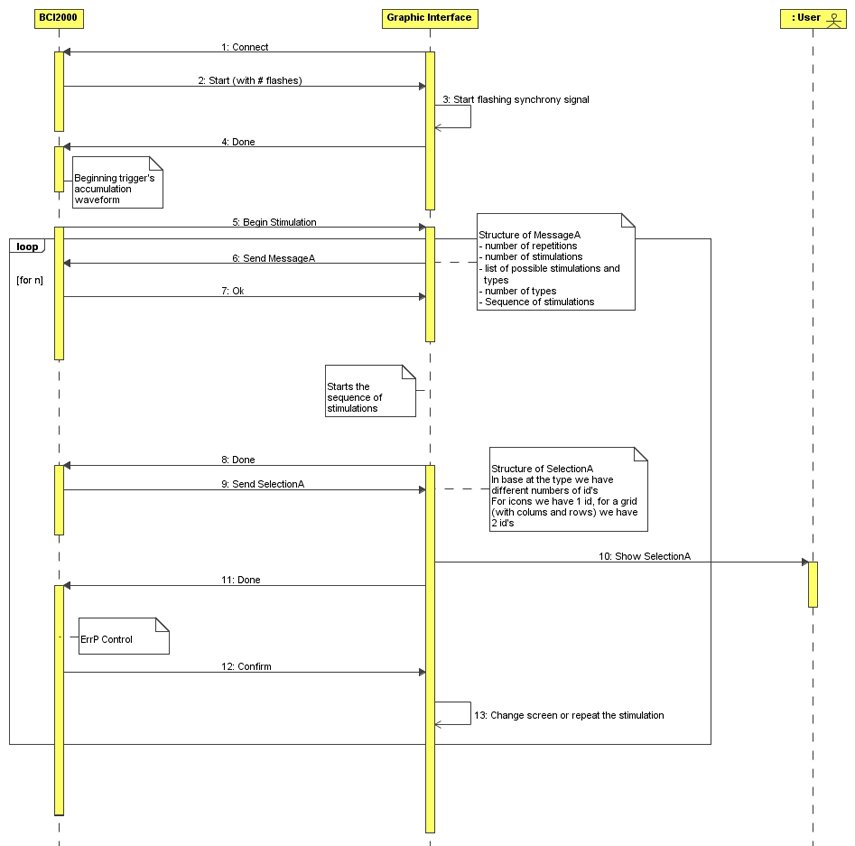

UML Sequence Diagram

Diagram

Comments about the diagram

Structure of MessageA:

- Number of repetitions: number of flashings

- Number of stimulations: number of flashings in one repetition

- List of the stimulations: list of the possible stimulations, each with its type

- Number of types

- Stimulations sequence: it includes all the repetitions

Each stimulation must have an associated type (e.g., Icon, Row-Column).
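The fields of MessageA listed above could be held in a structure like the following sketch. The field names, the use of integer ids, and the consistency check (sequence length equal to repetitions times stimulations per repetition) are assumptions derived from the list, not a confirmed specification.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MessageA:
    repetitions: int                      # number of repetitions
    stimulations_per_repetition: int      # flashings in one repetition
    stimulations: List[Tuple[int, str]]   # (stimulation id, type name)
    sequence: List[int]                   # ids over all repetitions

    @property
    def number_of_types(self) -> int:
        """Count the distinct stimulation types in the list."""
        return len({stim_type for _, stim_type in self.stimulations})

    def validate(self) -> None:
        """Check that the sequence is consistent with the other fields."""
        expected = self.repetitions * self.stimulations_per_repetition
        if len(self.sequence) != expected:
            raise ValueError(f"expected {expected} stimulations in the sequence")
        known = {stim_id for stim_id, _ in self.stimulations}
        if any(stim_id not in known for stim_id in self.sequence):
            raise ValueError("sequence refers to an unknown stimulation id")


msg = MessageA(2, 3, [(0, "Icon"), (1, "Icon"), (2, "Icon")], [0, 1, 2, 2, 0, 1])
msg.validate()
```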

Structure of SelectionA:

- The number of ids depends on the stimulation type (e.g., 1 id is used for Icon, 2 ids for Row-Column)
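A minimal sketch of that rule: the table below maps each stimulation type named on this page to the number of ids a SelectionA carries for it. The dictionary and function names are hypothetical.

```python
from typing import Dict, List

# Number of ids expected for each stimulation type (values from the text above).
IDS_PER_TYPE: Dict[str, int] = {"Icon": 1, "Row-Column": 2}


def selection_is_well_formed(stim_type: str, ids: List[int]) -> bool:
    """True if the selection carries the right number of ids for its type."""
    if stim_type not in IDS_PER_TYPE:
        raise ValueError(f"unknown stimulation type: {stim_type!r}")
    return len(ids) == IDS_PER_TYPE[stim_type]


icon_ok = selection_is_well_formed("Icon", [4])
row_col_ok = selection_is_well_formed("Row-Column", [1, 2])  # row id, column id
```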

There will also be an asynchronous End message, which pauses the BCI and closes the graphical interface.

If synchrony is lost, a Reset message restarts the process from calibration. It is triggered when the number of classifications differs from the number of stimulations on the screen.
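The End and Reset behaviour described above might be modelled as a small state transition. The state names and the function signature below are assumptions made for the sketch; only the trigger condition (a mismatch between the two counts) comes from the text.

```python
from enum import Enum


class State(Enum):
    CALIBRATION = "calibration"
    RUNNING = "running"
    PAUSED = "paused"


def synchrony_lost(n_classifications: int, n_stimulations: int) -> bool:
    """Synchrony is considered lost when the two counts differ."""
    return n_classifications != n_stimulations


def next_state(state: State, n_classifications: int, n_stimulations: int,
               end_requested: bool = False) -> State:
    """Apply the End and Reset rules to the current state."""
    if end_requested:
        return State.PAUSED        # End message: the BCI is paused
    if synchrony_lost(n_classifications, n_stimulations):
        return State.CALIBRATION   # Reset message: restart from calibration
    return state


after_mismatch = next_state(State.RUNNING, 5, 6)
```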