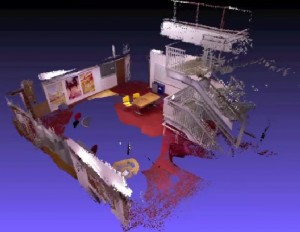

Point cloud SLAM with Microsoft Kinect

From AIRWiki

Revision as of 16:23, 16 April 2012 by MatteoMatteucci (Talk | contribs) (Created page with "{{ProjectProposal |title=Poit cloud SLAM with Microsoft Kinect |image=PointCloudKinect.jpg |description=Simultaneous Localization and Mapping (SLAM) is one of the basic functi...")

| Title: | Point cloud SLAM with Microsoft Kinect |

Image:PointCloudKinect.jpg |

| Description: | Simultaneous Localization and Mapping (SLAM) is one of the basic functionalities required of an autonomous robot. In the past we have developed a framework for building SLAM algorithms based on the Extended Kalman Filter and vision sensors. A recently available vision sensor with great potential for autonomous robots is the Microsoft Kinect RGB-D sensor. The thesis aims to integrate the Kinect sensor into this framework in order to develop a point cloud based SLAM system.

Material:

Expected outcome:

Required skills or skills to be acquired:

| |

| Tutor: | MatteoMatteucci (matteo.matteucci@polimi.it) | |

| Start: | 2012/04/01 | |

| Students: | 1 - 2 | |

| CFU: | 10 - 20 | |

| Research Area: | Computer Vision and Image Analysis | |

| Research Topic: | none | |

| Level: | Bs, Ms | |

| Type: | Thesis | |

| Status: | Active |