Inverse scaling parametrization for Monocular Simultaneous Localization and Mapping

Part 1: project profile

Project name

Inverse scaling parametrization for Monocular Simultaneous Localization and Mapping.

Project short description

This project regards the C++ implementation of a novel parametrization for solving the monocular SLAM problem. Please refer to the paper "Monocular SLAM with Inverse Scaling Parametrization" (D. Marzorati, M. Matteucci, D. Migliore, D. G. Sorrenti) for more details.

Dates

Start date: 2008/08/06

End date:

Internet site(s)

People involved

Project head(s)

Other Politecnico di Milano people

Students currently working on the project

Laboratory work and risk analysis

This project does not include laboratory activities.

Part 2: project description

One of the most relevant problems encountered so far in the field of mobile robotics is constituted by the difficulty of having a reliable navigation system. The kinks are due to the impossibility of building a detailed map of every environment a robot might be located in, and to the inherent inaccuracies of both the sensing and motion systems. The problem, faced with so called Simultaneous Localization And Mapping algorithms, after more than ten years of research can now be classified as solved, despite several issues remains open. In this project a novel approach for monocular (i.e. using a single camera and no other sensors) SLAM is implemented and tested on both simulated and real data. The final goal is to have a system working in real time on indoor/outdoor images with limited computational resources. Up to now the part working on simulated data is completed, while the part working on real data requires a novel implementation of the feature-matching algorithms to be fully functional.

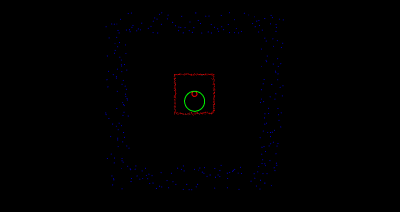

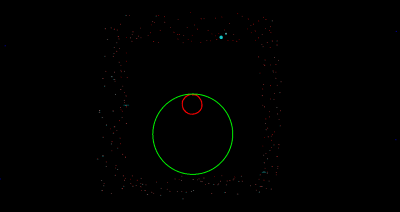

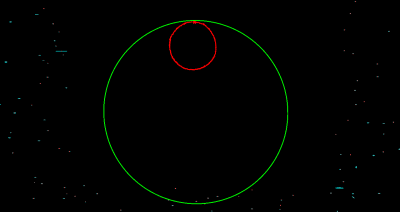

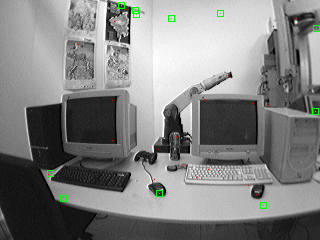

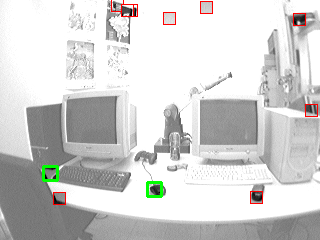

Below some screenshots resulting from the application of the algorithm to both simulated and real data are reported. In the simulated data images, blue dots are artificially generated features, red ones are the corresponding estimated features in the map (in cyan the corresponding uncertainty). The green line represents the trajectory followed by the robot, while the red one is the estimated trajectory. In real data images the red dots are the features extracted from the current scene, while the green dots are those predicted from the current state (the green square has no technical meaning, it's used only as a visual help for point localization in the image). Blue squares represents patches that are matched with the green ones, and red ones are predicted patches (those surrounded in thick green are matched ones).

Simulated Data

Real Data