Development an Artificial Intelligence System solving the MS Pac-Man videogame

Contents

Part 1: project profile

Project name

Development an Artificial Intelligence System solving the videogame MS Pac-Man

Project short description

The project is about the use of the neuro evolutionary algorithm NEAT in order to create one complex neural net able to solve the MS PacMan game. The algorithm is apply and modify in the way to accelerate the learning process an reach our goals, so we have to find a Neural Net explore the maze, escape from the ghosts, eat all the pill in the maze and finally maximise the score.

Dates

Start date: 2008/01/07

End date: 2008/09/30

Website(s)

http://cswww.essex.ac.uk/staff/sml/pacman/PacManContest.html

People involved

Project head(s)

P.L.Lanzi - User:PierLucaLanzi

D.Loiacono - User:DanieleLoiacono

Other Politecnico di Milano people

Students currently working on the project

Sandro Baranzini - User:SandroBaranzini

Students who worked on the project in the past

External personnel:

Laboratory work and risk analysis

Part 2: project description

[Temporary Version]

>>> INTRODUCTION

This thesis borns from the challenge presented in the WCCI (Wolrd Congress on Computational Intelligence) 2008 placed in Hong Kong in June 2008, in particular from the MSPacMan Competition. PacMan is a Namco arcade game, born in the 1980, early became most famous and loved by the worldwide audience. The main difference between the original PacMan and the Competition's version MSPacMan is the non deterministic character of Ghost’s movement that bring the MSPacMan version more interesting in the Artificial Intelligence science than the original one. So this Thesis work is to find an AI formalism able to play the MSPacMan, controlling the agent in order to escape from Ghosts, living and score as much as he can. The main difficulties are about the non deterministic character of the Ghosts, the complexity of the maze, presenting during the level in three stages (see next figure), and the long time simulation needed by whatever Machine Learning algorithm.

Initially we decide to using the neuro evolutionary algorithm calling NEAT created by Kenneth O. Stanley and implemented in C++ in its first version. Many other version was born soon, in particular ANJI, the version we use, a good implemented and documented version written in Java. The main idea of this Algorithm is to merge the benefits of the Neural Nets, with Genetic Algorithm’s great evolutional feature. Through epoch Generations, Neural Nets Populations evolve, thanks to Crossover, Mutation and Speciations, guided by a Fitness function that measure the single Neural Net goodness for our goal.

>>> SOFTWARE AVAILABLE

We have three software system have to integrated themselves and cooperate during long time Simulation. So we got the game MSPacMan, the Microsoft version included in the “Return Of Arcade – Anniversary Edition“ pack, the competition’s software that capture the screen image, elaborated it pixel for pixel and reassume all the information in a java Class creating the game state; it was necessary modified it in order to “insert coin”, and “start game” automatically, respectively pressing the F2 and F3 key. Finally we have the ANJI source code that we had integrated with competition’s software in the way each Neural Net can play the game, allowing us to measure its fitness.

>>> EARLY ATTEMPTS

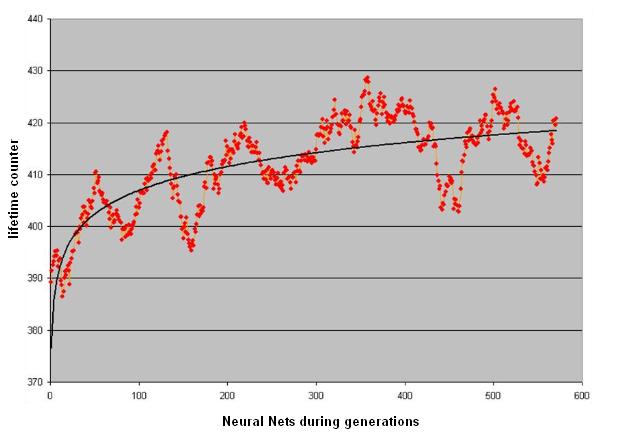

First of all we have to choose the inputs of the Nets; we have many variables in our problem every stored in the Game State Class. So we start the simulations assumed that the MSPAcMan is a classical Predator-Prey problem. So we choose, like problem inputs, the position of the agent, the positions of ghosts, the wall of the maze in all of four cardinal point centered in the agent, the last four inputs are the direction of ghosts. In these early simulations we measure the Fitness like agent’s lifetime counter.

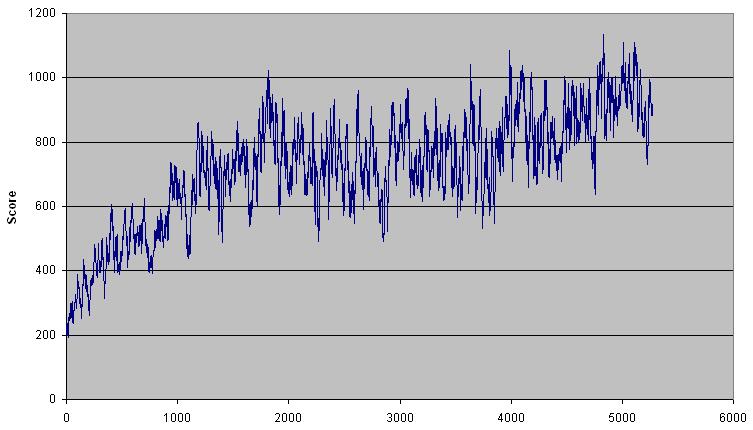

Results of these early attempts was no satisfactory, see the figure above with the graphical representation of moving average of fitness during 40 generations, and shows us that the net needs more generations to evolve and the information about the closest pill by the PacMan in order to give agent the capacity to explore the maze in the direction of remaining pills. So we did, and doing again today, other simulation with less input, a local representation of the agent, of ghosts and of the closest pill; in addition we measure the Fitness like the game score. We get better results, you can see in next figure that the process of learning able to increase the score about 1000 points, that confirm us that’s the right direction to solve the problem ….