Emergent Semantics in Wikipedia

| | |
|---|---|
| Short Description | Represent Wikipedia pages as a graph whose edge weights are given by a "social similarity" metric, and detect an emergent semantics in it. |
| Coordinator | Marco Colombetti (colombet@elet.polimi.it) |
| Tutor | David Laniado (david.laniado@gmail.com), Riccardo Tasso (tasso@elet.polimi.it) |
| Collaborator | |
| Students | Fabio Colzada (anywhere88@hotmail.it), Mattia Di Vitto (mattiadivitto@gmail.com) |
| Research Area | Social Software and Semantic Web |
| Research Topic | Graph Mining and Analysis, Semantic Tagging |
| Start | 2011/02/20 |
| End | 2011/07/16 |
| Status | Closed |
| Level | Bs |
| Type | Thesis |

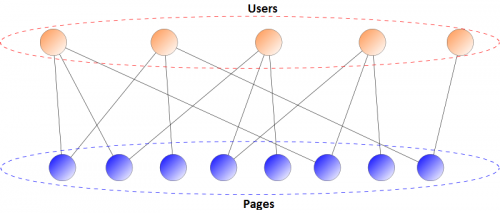

The aim of this project was to develop a social measure of the similarity between two Wikipedia pages, based on the quality and quantity of the contributions made to both pages by the users they share. From a bipartite network of pages and users, it is then possible to obtain a one-mode network in which the nodes are the pages themselves.

Build a network of pages

The network used in our analysis arises from the interaction between users and the encyclopedia, so the object of our analysis is created dynamically by a distributed system of contributions. We adopted the EditLongevity measure to estimate the quality (and quantity) of an edit made by a given user on a given page. This metric is used directly to weight the edges of the initial bipartite network between users and pages; in this network, two pages are indirectly connected whenever they share a contributor who has edited both.
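
A minimal Python sketch of this first step, assuming the EditLongevity score of each edit has already been computed and is available as hypothetical `(user, page, longevity)` triples. Summing the scores of repeated edits by the same user on the same page is also an assumption made here for illustration; the original work may aggregate them differently. The function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical input record: (user, page, edit_longevity). The EditLongevity
# score is assumed to be precomputed; its derivation is not shown here.
Edit = tuple[str, str, float]


def build_bipartite(edits: list[Edit]) -> dict[str, dict[str, float]]:
    """Return page -> {user: weight}, summing the scores of repeated edits (an assumption)."""
    pages: defaultdict[str, defaultdict[str, float]] = defaultdict(lambda: defaultdict(float))
    for user, page, longevity in edits:
        pages[page][user] += longevity
    # Convert to plain dicts so later lookups cannot silently create new keys.
    return {page: dict(users) for page, users in pages.items()}


def pages_sharing_a_user(bipartite: dict[str, dict[str, float]]) -> set[tuple[str, str]]:
    """Return the unordered page pairs (alphabetically ordered) that share at least one contributor."""
    pages_by_user: defaultdict[str, set[str]] = defaultdict(set)
    for page, users in bipartite.items():
        for user in users:
            pages_by_user[user].add(page)
    pairs: set[tuple[str, str]] = set()
    for pages in pages_by_user.values():
        for a in pages:
            for b in pages:
                if a < b:
                    pairs.add((a, b))
    return pairs
```

For example, if `alice` edits both "Rome" and "Milan" while `bob` edits only "Rome", the only indirectly connected pair is `("Milan", "Rome")`.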

To obtain a one-mode network from the two-mode network, we chose the cosine similarity measure to compute the weight of the edge directly connecting two pages. In this way the measure accounts not only for the relative weights of the two user-page connections, but also for the overall size of each page, since the similarity is normalized by the magnitude of each page's vector of contributor weights.
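
The projection can be sketched in Python as follows, starting from a page → {user: weight} mapping such as one built from EditLongevity scores. Each page is treated as a sparse vector over users, and the cosine similarity of two such vectors becomes the weight of the undirected edge between the two pages. The function names are hypothetical, and the weights are assumed to be non-negative.

```python
import math
from itertools import combinations


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity between two sparse user -> weight vectors."""
    dot = sum(w * v[user] for user, w in u.items() if user in v)
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def project(bipartite: dict[str, dict[str, float]]) -> dict[tuple[str, str], float]:
    """One-mode page network: undirected edge (a, b) with a < b, weighted by cosine similarity.

    With non-negative weights, a positive similarity means the pages share a contributor,
    so pairs with similarity 0 are simply left out.
    """
    edges: dict[tuple[str, str], float] = {}
    for a, b in combinations(sorted(bipartite), 2):
        sim = cosine(bipartite[a], bipartite[b])
        if sim > 0:
            edges[(a, b)] = sim
    return edges
```

Comparing every pair of pages is quadratic in the number of pages; on a graph of Wikipedia's size one would instead enumerate only the pairs of pages that share at least one contributor. The normalization is what brings page size into the measure: a page with many heavily weighted contributors has a large norm, so a single shared user adds less to its similarity with other pages.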

Community detection in the Wikipedia graph

By applying a fast community detection algorithm to the network, we obtained groups of Wikipedia articles. We actually analyzed the results of two different algorithms, both based on modularity optimization: the Fastgreedy algorithm (with a workaround for the resolution limit issue) and the Louvain method. Although the two gave similar results, we based the final analysis on the data obtained with the Louvain method, which we considered more reliable than Fastgreedy.
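
Both Fastgreedy and Louvain search for a partition that maximizes modularity. The short Python sketch below does not perform that search; it only scores a given partition with the standard weighted modularity formula, which is the quantity both methods optimize. For the search itself a library implementation would normally be used, for example `louvain_communities` and `greedy_modularity_communities` in NetworkX. The function name and the edge representation are hypothetical, and self-loops are assumed to be absent.

```python
from collections import defaultdict


def modularity(edges: dict[tuple[str, str], float], community: dict[str, int]) -> float:
    """Weighted modularity Q of a partition of an undirected graph without self-loops.

    Q = sum over communities c of [ W_c / m - (S_c / (2m))^2 ], where W_c is the total
    weight of edges inside c, S_c the total strength (weighted degree) of the nodes in c,
    and m the total edge weight of the graph.
    """
    m = sum(edges.values())
    if m == 0:
        return 0.0
    internal: defaultdict[int, float] = defaultdict(float)
    strength: defaultdict[int, float] = defaultdict(float)
    for (a, b), w in edges.items():
        # Each edge adds its weight to the strength of both endpoints' communities.
        strength[community[a]] += w
        strength[community[b]] += w
        if community[a] == community[b]:
            internal[community[a]] += w
    return sum(internal[c] / m - (strength[c] / (2 * m)) ** 2 for c in strength)
```

For two triangles joined by a single edge (all weights 1), splitting them into two communities gives Q = 5/14 ≈ 0.357, while putting every node in one community gives Q = 0.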

Results

To restrict the dataset while keeping the most meaningful information, we applied several thresholds during the process. This led to a graph with many small groups of pages (often containing just 2 or 3 articles) and two main components: one containing only pages about geography, and the largest one, more interesting for our purpose, containing pages from various semantic areas.

After community detection, we carried out a further analysis of the internal semantics of the largest communities of pages. Taking the structure of Wikipedia's categories and forcing its representation into a tree, we calculated how strongly each cluster is bound to a limited set of categories with related meanings. We found that almost all of the analyzed groups contain pages belonging to the same semantic area, which suggests that an emergent semantics can be extracted from such a network.

Particularly interesting is the presence of a single large and very heterogeneous group of pages that is not covered by any restricted set of categories. Some hypotheses have been made about this group, noting that its members are usually large pages often considered to be of general interest.
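
The source does not specify which thresholds were applied or to which quantities. As a purely illustrative sketch, assuming a single hypothetical minimum-similarity cut-off on the edges of the projected page network, the following Python code drops weak edges and lists the resulting connected components, largest first. The function names and the cut-off are hypothetical.

```python
from collections import defaultdict


def threshold_edges(edges: dict[tuple[str, str], float], min_weight: float) -> dict[tuple[str, str], float]:
    """Keep only edges whose similarity is at least min_weight (a hypothetical cut-off)."""
    return {pair: w for pair, w in edges.items() if w >= min_weight}


def connected_components(edges: dict[tuple[str, str], float]) -> list[set[str]]:
    """Return the connected components of an undirected edge set, largest first."""
    adj: defaultdict[str, set[str]] = defaultdict(set)
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    seen: set[str] = set()
    components: list[set[str]] = []
    for start in adj:
        if start in seen:
            continue
        # Iterative depth-first search from an unvisited node.
        stack = [start]
        seen.add(start)
        comp: set[str] = set()
        while stack:
            node = stack.pop()
            comp.add(node)
            for nb in adj[node]:
                if nb not in seen:
                    seen.add(nb)
                    stack.append(nb)
        components.append(comp)
    components.sort(key=len, reverse=True)
    return components
```

In this sketch, pages left without any edge above the cut-off simply disappear from the result rather than forming singleton components.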