Mu and beta rhythm-based BCI

Part 1: project profile

Project name

Control of a two-dimensional movement signal by a noninvasive BCI

Project short description

The aim of this project is to design and develop a Brain-Computer Interface (BCI) that controls a two-dimensional movement signal, as done at the Wadsworth Center (Wolpaw and McFarland, 2004). A non-invasive approach will be adopted, using EEG as the signal source and Mu and Beta rhythms over the sensorimotor cortex as information carriers.

Dates

Start date: 2008/04/01

End date:

People involved

Project head(s)

Other Politecnico di Milano people

Students currently working on the project

Laboratory work and risk analysis

Laboratory work for this project will be performed mainly at AIRLab/Lambrate. The main laboratory activity will be EEG data acquisition. Although it may appear safe, this activity is potentially hazardous because the EEG instrumentation is in contact with the subject's body. For safety's sake, every electronic device connected to the system should be isolated from the power line. In addition, the laboratory presents more general risks. The standard safety measures described in Safety norms will be followed.

Part 2: project description

The main purpose of this project is to implement the BCI algorithms used at the Wadsworth Center and proposed by J. R. Wolpaw and D. J. McFarland in their 2004 paper. In that experiment, subjects were able to control the position of a cursor on the screen by appropriately modulating the amplitude of their Mu and Beta rhythms.

The Wadsworth BCI

The study protocol

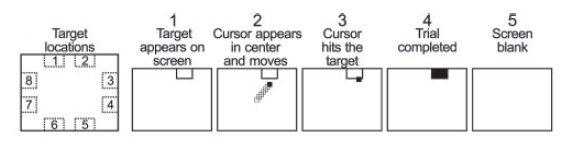

While the subject sat in front of the screen, EEG was recorded from 64 scalp electrodes, band-pass filtered (0.1-60 Hz) and digitized at 160 Hz. At the beginning of each trial, a target appeared at one of eight possible locations along the edges of the screen, in block-randomized order. One second later, the cursor appeared at the center of the screen and began to move according to the EEG activity. The movement lasted 10 seconds (or less, if the cursor reached the target earlier); the screen then remained blank for one second before the next trial began.

Each session consisted of eight 3-minute runs separated by 1-minute breaks.

Control of cursor movement

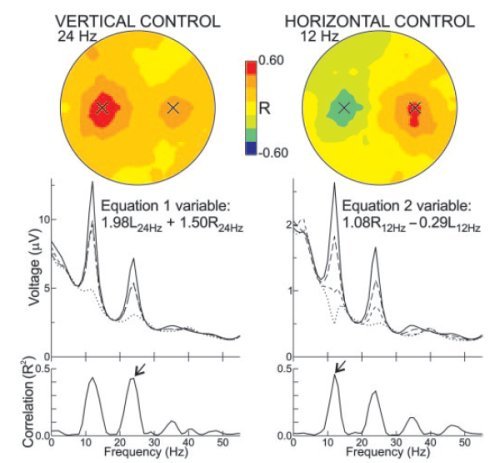

The cursor position was updated every 50 ms. The amount of movement depended on the Mu and Beta amplitudes over the right and left hemispheres (the precise Mu and Beta frequency bands were selected for each subject on the basis of previous experiments).

The last 400 ms of signal from electrodes C3 and C4 underwent autoregressive frequency analysis to determine the spectral features; movement in the vertical and horizontal directions was then calculated as:

Mv = av (wrv*Rv + wlv*Lv + bv)

Mh = ah (wrh*Rh + wlh*Lh + bh)

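where Rv and Lv are the right- and left-hemisphere amplitudes in the band used for vertical control, Rh and Lh are the corresponding amplitudes in the band used for horizontal control, the wij are adaptively selected weights (see below), and av, bv, ah, bh are normalizing factors (a gain a and an offset b for each direction).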
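At 160 Hz, the 400 ms analysis window holds 160 × 0.4 = 64 samples, and each 50 ms update adds 8 new samples. The sketch below shows one way to turn such a window into a band amplitude with an autoregressive model. It solves the Yule-Walker equations with the Levinson-Durbin recursion, chosen here for brevity; this may differ from the estimator used in the original study or in BCI2000. The model order (16), the 0.5 Hz frequency grid and all function names are assumptions for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Biased (1/N) autocorrelation of the mean-removed window, lags 0..order.
std::vector<double> autocorr(const std::vector<double>& x, std::size_t order) {
    const std::size_t n = x.size();
    double mean = 0.0;
    for (double v : x) mean += v;
    mean /= static_cast<double>(n);
    std::vector<double> r(order + 1, 0.0);
    for (std::size_t lag = 0; lag <= order; ++lag) {
        for (std::size_t i = lag; i < n; ++i)
            r[lag] += (x[i] - mean) * (x[i - lag] - mean);
        r[lag] /= static_cast<double>(n);
    }
    return r;
}

// AR model x[t] = sum_{k=1..p} a[k] * x[t-k] + e[t], with Var(e) = noiseVar.
struct ARModel {
    std::vector<double> a;  // a[0] is unused
    double noiseVar;
};

// Levinson-Durbin recursion solving the Yule-Walker equations.
ARModel levinsonDurbin(const std::vector<double>& r, std::size_t p) {
    std::vector<double> a(p + 1, 0.0);
    double err = r[0];
    for (std::size_t k = 1; k <= p; ++k) {
        double acc = r[k];
        for (std::size_t j = 1; j < k; ++j) acc -= a[j] * r[k - j];
        const double refl = acc / err;  // reflection coefficient
        const std::vector<double> prev = a;
        a[k] = refl;
        for (std::size_t j = 1; j < k; ++j) a[j] = prev[j] - refl * prev[k - j];
        err *= 1.0 - refl * refl;       // prediction error variance of order k
    }
    return {a, err};
}

// Spectral amplitude (square root of the AR spectrum, up to a constant) at freqHz.
double arAmplitude(const ARModel& m, double freqHz, double fsHz) {
    const double pi = 3.14159265358979323846;
    double re = 1.0, im = 0.0;  // accumulates 1 - sum_k a[k] * exp(-i * w * k)
    for (std::size_t k = 1; k < m.a.size(); ++k) {
        const double w = 2.0 * pi * freqHz * static_cast<double>(k) / fsHz;
        re -= m.a[k] * std::cos(w);
        im += m.a[k] * std::sin(w);
    }
    return std::sqrt(m.noiseVar / (re * re + im * im));
}

// Mean AR amplitude over [loHz, hiHz], sampled every 0.5 Hz, for one window.
double bandAmplitude(const std::vector<double>& window, double loHz, double hiHz,
                     double fsHz, std::size_t order) {
    assert(window.size() > order && hiHz >= loHz);
    const ARModel m = levinsonDurbin(autocorr(window, order), order);
    double sum = 0.0;
    int count = 0;
    for (double f = loHz; f <= hiHz; f += 0.5, ++count) sum += arAmplitude(m, f, fsHz);
    return sum / count;
}
```

Applied to the C3 and C4 windows, the resulting band amplitudes would play the roles of L and R in the equations above, since C3 lies over the left hemisphere and C4 over the right.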

Adaptation algorithm

Initially, the weights in the linear equations above were set as:

wrv = wlv = 1

wrh = 1

wlh = -1

so that the vertical movement was controlled by the sum of right and left spectral amplitudes in one of the two bands, while the horizontal one was controlled by the difference between right and left amplitudes in the other.
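A minimal sketch of the two linear equations with these initial weights, to make the sum/difference structure concrete. The struct and function names are hypothetical, and the gain and offset values are supplied by the caller rather than taken from the study.

```cpp
// One movement axis: M = a * (wR * R + wL * L + b).
struct AxisTranslator {
    double wR;  // weight of the right-hemisphere amplitude (adapted)
    double wL;  // weight of the left-hemisphere amplitude (adapted)
    double a;   // gain
    double b;   // offset
    double move(double right, double left) const {
        return a * (wR * right + wL * left + b);
    }
};

// Initial weights: sum for vertical movement, difference for horizontal movement.
AxisTranslator initialVertical(double a, double b)   { return {1.0, 1.0, a, b}; }
AxisTranslator initialHorizontal(double a, double b) { return {1.0, -1.0, a, b}; }
```

With these weights, an equal increase in right and left amplitudes moves the cursor vertically but cancels out horizontally; only a right/left asymmetry produces horizontal movement.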

The weights were adapted automatically at each trial to optimize the translation of EEG features into cursor movement. First, each target was expressed as a pair of coordinates, each corresponding to one of the four possible horizontal or vertical positions. Then, at each trial, the Least-Mean-Square (LMS) algorithm was used to minimize, over past trials, the difference between the actual target position and the position predicted by the two linear equations.
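The paragraph above leaves the details of the LMS fit open. The sketch below assumes that each past trial is summarized, for one axis, by the mean right and left amplitudes over the trial and the target coordinate on that axis, and it runs sequential LMS (Widrow-Hoff) passes over those stored trials. The per-trial averaging, the intercept term `c`, the learning rate and the number of passes are all assumptions made for illustration.

```cpp
#include <cstddef>
#include <vector>

// Summary of one completed trial on a single axis (hypothetical layout):
// mean right/left band amplitudes and the target coordinate on that axis.
struct Trial {
    double right;
    double left;
    double target;
};

// Linear prediction for one axis: wR * R + wL * L + c.
struct LinearAxis {
    double wR;
    double wL;
    double c;  // intercept term (an assumption of this sketch)
};

// Sequential LMS passes over the stored past trials.
LinearAxis fitLMS(const std::vector<Trial>& trials, LinearAxis w,
                  double eta, std::size_t passes) {
    for (std::size_t p = 0; p < passes; ++p) {
        for (const Trial& t : trials) {
            const double predicted = w.wR * t.right + w.wL * t.left + w.c;
            const double err = t.target - predicted;  // target minus prediction
            w.wR += eta * err * t.right;
            w.wL += eta * err * t.left;
            w.c += eta * err;
        }
    }
    return w;
}
```

Starting from the initial weights (wR = wL = 1 for the vertical axis) and refitting after every trial lets the weights track slow changes in the subject's control strategy.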

Implementation

BCI2000

This project's Brain-Computer Interface is built with BCI2000. BCI2000 is a general-purpose EEG software toolbox, developed by the Wadsworth Center and the University of Tübingen, designed to facilitate research and application development in the BCI area.

(More detailed information about BCI2000 can be found at the application website or at the BCI2000 wiki).

BCI2000 is structured in blocks called modules: the Source, Signal Processing (filtering) and Application modules are independent of each other, which makes it easy to modify existing code and insert new code.

In our case, we need to write a new filter. The signal processing part of a BCI2000 interface is organized as a filter chain: the input signal from the Source module passes through a series of filters that translate it into a control signal. For example, a simplified Wadsworth BCI could consist of a spatial (Laplacian) filter, an autoregressive filter for frequency analysis, a linear classifier and a normalizer.

In such a setup, all we need is an additional filter between the autoregressive filter and the linear classifier that applies an iterative version of the LMS algorithm to the classifier weights and updates them at the end of each trial.

Delta Rule Adapter

The new filter is called DeltaRuleAdapter. It implements the Delta Rule, a gradient-descent learning rule, to minimize the squared prediction error.
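In its textbook form, the Delta Rule is gradient descent on the squared error of a linear output. Writing x_j for the input features, y_i for the classifier outputs, t_i for the desired outputs and η for a learning rate (notation introduced here, not taken from the filter's code; note that i indexes the output axis and j the feature, unlike the wrv/wlh naming above), one update step reads:

```latex
y_i = \sum_j w_{ij}\, x_j, \qquad e_i = t_i - y_i, \qquad E = \frac{1}{2} \sum_i e_i^2

\frac{\partial E}{\partial w_{ij}} = -\, e_i\, x_j
\quad\Longrightarrow\quad
w_{ij} \leftarrow w_{ij} - \eta \frac{\partial E}{\partial w_{ij}} = w_{ij} + \eta\, e_i\, x_j
```

The filter's two adaptation modes differ in how the error e_i is defined.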

The filter copies the current weights from the classifier at the beginning of each trial and then updates them iteratively in its own buffer, according to the Delta Rule, throughout the trial. At the end of the trial, it writes the updated weights back to the classifier. There are currently two types of adaptation.
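The trial life cycle just described can be sketched as a small class. This is not the BCI2000 filter interface: the class name, the method names and the weight layout (one row per output axis, one column per feature) are hypothetical, and the error vector is supplied by the caller so that either error type can be plugged in.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical weight layout: weights[i][j] maps feature j to output axis i.
using Weights = std::vector<std::vector<double>>;

class DeltaRuleAdapterSketch {
public:
    explicit DeltaRuleAdapterSketch(double learningRate) : eta_(learningRate) {}

    // Trial start: copy the classifier's current weights into the local buffer.
    void beginTrial(const Weights& classifierWeights) { buffer_ = classifierWeights; }

    // Output of the buffered weights for one block of features ("predicted").
    std::vector<double> predict(const std::vector<double>& features) const {
        std::vector<double> out(buffer_.size(), 0.0);
        for (std::size_t i = 0; i < buffer_.size(); ++i)
            for (std::size_t j = 0; j < buffer_[i].size() && j < features.size(); ++j)
                out[i] += buffer_[i][j] * features[j];
        return out;
    }

    // Each sample block: delta-rule step w_ij += eta * err_i * x_j, buffer only.
    void update(const std::vector<double>& features, const std::vector<double>& err) {
        for (std::size_t i = 0; i < buffer_.size() && i < err.size(); ++i)
            for (std::size_t j = 0; j < buffer_[i].size() && j < features.size(); ++j)
                buffer_[i][j] += eta_ * err[i] * features[j];
    }

    // Trial end: write the adapted weights back to the classifier.
    void endTrial(Weights& classifierWeights) const { classifierWeights = buffer_; }

private:
    double eta_;
    Weights buffer_;
};
```

In this sketch the classifier keeps fixed weights for the whole trial, and the adapted weights only take effect from the next trial.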

The first is based on the positional error at each sample block: at each iteration, the new cursor position is computed from the classifier output, and the error is the difference between the target position and this position on each axis:

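```cpp
// compute new position: scale the classifier output by the normalizer gains,
// then advance the cursor (0.0125 is the step factor used in the filter)
predicted[0] *= Parameter("NormalizerGains")(0);
predicted[1] *= Parameter("NormalizerGains")(1);
pos[0] += predicted[0] * 0.0125;
pos[1] += predicted[1] * 0.0125;

// compute x and y error
err[0] = xTarget - pos[0];
err[1] = yTarget - pos[1];
```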

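where "predicted" represents the output of the linear classifier with the current weights. This kind of adaptation is more intuitive, but since the cursor starts each trial at the center of the screen, large errors are always present early in the trial, leading to unnecessary weight updates. The filter therefore offers a second option, adaptation based on directional error: the predicted movement direction is extended from the current cursor position to the edge of the screen, and the error is measured between that intersection point and the target: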

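```cpp
// compute movement direction as the line y = m*x + q through the current position
// (note: predicted[0] == 0, i.e. purely vertical movement, is not handled here)
m = predicted[1] / predicted[0];
q = pos[1] - m*pos[0];

// compute the coordinates of the intersection between the
// movement direction and the edges of the screen ([-1, 1] on both axes)
if (m >= 0) {
    if (predicted[0] > 0) {
        // moving right and up: hit the right edge (x = 1) or the top edge (y = 1)
        fpos[0] = min(1.0, (1.0 - q) / m);
        fpos[1] = min(1.0, m + q);
    }
    if (predicted[0] < 0) {
        // moving left and down: hit the left edge (x = -1) or the bottom edge (y = -1)
        fpos[0] = max(-1.0, (-1.0 - q) / m);
        fpos[1] = max(-1.0, q - m);   // y at x = -1 is -m + q
    }
}
if (m < 0) {
    if (predicted[0] > 0) {
        //...
    }
    if (predicted[0] < 0) {
        //...
    }
}

// compute x and y error
err[0] = xTarget - fpos[0];
err[1] = yTarget - fpos[1];
```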
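For reference, both error types can also be written as standalone functions. This is an alternative formulation, not the filter's code: instead of the slope-intercept form, the directional error follows the ray pos + s * predicted (s ≥ 0) until it leaves the square [-1, 1] × [-1, 1], which covers all four quadrants and purely vertical movement without dividing by the x component. The screen bounds, the function names and the `gains`/`step` arguments (standing in for NormalizerGains and the 0.0125 factor) are assumptions.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <cstddef>
#include <limits>

using Vec2 = std::array<double, 2>;  // (x, y); the screen is assumed to span [-1, 1]

// Positional error: advance the cursor by the scaled classifier output,
// then return target - position on each axis. Updates pos in place.
Vec2 positionalError(Vec2& pos, const Vec2& predicted, const Vec2& gains,
                     const Vec2& target, double step) {
    for (std::size_t k = 0; k < 2; ++k) pos[k] += gains[k] * predicted[k] * step;
    return {target[0] - pos[0], target[1] - pos[1]};
}

// Directional error: follow the ray pos + s * predicted (s >= 0) to the point
// where it leaves the screen, then return target - exit point.
Vec2 directionalError(const Vec2& pos, const Vec2& predicted, const Vec2& target) {
    double sExit = std::numeric_limits<double>::infinity();
    for (std::size_t k = 0; k < 2; ++k) {
        if (predicted[k] > 0.0)
            sExit = std::min(sExit, (1.0 - pos[k]) / predicted[k]);   // right/top edge
        else if (predicted[k] < 0.0)
            sExit = std::min(sExit, (-1.0 - pos[k]) / predicted[k]);  // left/bottom edge
    }
    if (std::isinf(sExit))  // no predicted movement: fall back to the positional error
        return {target[0] - pos[0], target[1] - pos[1]};
    const Vec2 exitPoint = {pos[0] + sExit * predicted[0], pos[1] + sExit * predicted[1]};
    return {target[0] - exitPoint[0], target[1] - exitPoint[1]};
}
```

For a cursor at the center moving at 45 degrees up and to the right, the exit point is the corner (1, 1), so a target at (1, 0) gives an error of (0, -1) regardless of how far the cursor has actually travelled.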

Laboratory activity

(coming soon)