Talk:Deep Learning on Event-Based Cameras

| Title: | Deep Learning on Event-Based Cameras |
|---|---|
| Description: | This project aims to study deep learning techniques on event-based cameras and develop algorithms to perform object recognition on those devices. |
| Tutor: | MatteoMatteucci |
| Start: | April 2017 |
| Number of students: | 1 |
| CFU: | 20 |


Introduction

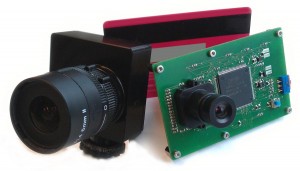

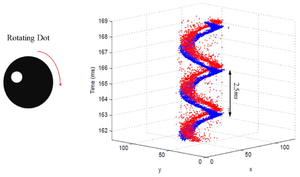

Standard cameras are limited in performance by their operating principle: they acquire visual information by taking snapshots of the entire scene at a fixed rate. This introduces a lot of redundancy in the data, since most of the time only a small part of the scene changes from the previous frame, and it limits the speed at which data can be sampled, so relevant information may be missed. Biologically inspired event-based cameras are instead driven by events happening inside the scene, without any notion of a frame. Each pixel of the sensor emits an event (spike), independently of the other pixels, every time it detects a change inside its field of view (a change of brightness or contrast). Each event is a tuple (x, y, p, t) containing the coordinates (x, y) of the pixel that generated the event, the polarity p of the event (whether it refers to an increase or a decrease in intensity) and the timestamp t of its creation. The output of the sensor is therefore a continuous flow of events describing the scene, with only a small delay with respect to the instant at which the real events happened. Systems that process the stream of events directly can take advantage of this low latency and produce decisions as soon as enough relevant information has been collected. The low latency of event-based cameras, together with their small size and low power consumption, makes this type of sensor suitable for many applications, including robotics.
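The event tuple described above can be sketched in Python as follows. This is a minimal, idealized illustration and not a model of any specific sensor: the `Event` type, the `events_from_change` helper and the contrast `threshold` value are hypothetical names and values chosen for the example. Comparing two intensity snapshots is only a convenient way to simulate what each pixel does independently and asynchronously in hardware.

```python
import math
from typing import List, NamedTuple, Sequence


class Event(NamedTuple):
    """One event (x, y, p, t) as described in the text."""
    x: int    # pixel column
    y: int    # pixel row
    p: int    # polarity: +1 for an intensity increase, -1 for a decrease
    t: float  # timestamp of creation, in seconds


def events_from_change(
    prev: Sequence[Sequence[float]],
    curr: Sequence[Sequence[float]],
    t: float,
    threshold: float = 0.2,  # hypothetical contrast threshold
) -> List[Event]:
    """Emit an event for every pixel whose log-intensity changed enough.

    Assumption: all intensities are strictly positive, and every event
    shares the timestamp t (a real sensor timestamps each pixel separately).
    """
    events = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (a, b) in enumerate(zip(row_prev, row_curr)):
            delta = math.log(b) - math.log(a)
            if abs(delta) >= threshold:
                events.append(Event(x, y, 1 if delta > 0 else -1, t))
    return events


if __name__ == "__main__":
    before = [[1.0, 1.0], [1.0, 1.0]]
    after = [[1.0, 2.0], [0.5, 1.0]]
    for ev in events_from_change(before, after, t=0.001):
        print(ev)
```

Only the two pixels that changed produce events; the unchanged pixels stay silent, which is where the reduction in redundancy comes from.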

State of the art

In recent years there has been growing interest in event-based vision and dynamic vision sensors (DVS), due to their advantages and the particular data representation they provide. Because of the spiking nature of the data, research has focused in particular on their use with biologically inspired systems. One example is Spiking Neural Networks, one of whose goals is to mimic how visual information is processed in the visual cortex; they are well suited to this type of sensor because of their ability to learn from spiking stimuli. Good results have also been obtained with recurrent architectures, such as LSTM models, which are able to learn spatio-temporal structure from sequences of inputs.

Spiking Neural Networks

A spiking neural network (SNN) is a biologically inspired model that takes into account the timing of incoming spikes. The basic neuron model of this kind is the Leaky Integrate-and-Fire (LIF) neuron, which can be represented as a state xj that is modified by the received stimuli. Every time a new spike arrives, the state xj is incremented or decremented according to the corresponding weight wj. When the state of the neuron reaches one of the two thresholds (positive or negative) +/- xth, the neuron generates an output spike and resets to its resting value xrest. After firing, the neuron is deactivated for a certain amount of time, called the refractory period, during which it cannot generate outputs; this state can also be imposed by lateral connections with nearby neurons. The state is also affected by a constant leak that moves the neuron’s state towards its resting value.
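The neuron dynamics described above can be sketched as a small event-driven Python class. This is only an illustration under stated assumptions: the class name `LIFNeuron`, all parameter values, the linear form of the leak, and the choice to ignore input spikes that arrive during the refractory period are assumptions made for the example, and lateral inhibition is left out.

```python
class LIFNeuron:
    """Event-driven Leaky Integrate-and-Fire neuron (illustrative sketch)."""

    def __init__(self, weights, x_th=1.0, x_rest=0.0,
                 leak_rate=10.0, t_refractory=0.005):
        self.weights = weights            # one weight per input connection
        self.x_th = x_th                  # firing thresholds are +x_th and -x_th
        self.x_rest = x_rest              # resting value of the state
        self.leak_rate = leak_rate        # state units per second (assumed linear leak)
        self.t_refractory = t_refractory  # seconds of inactivity after a spike
        self.x = x_rest                   # current state
        self.t_last = 0.0                 # time of the last state update
        self.t_ready = 0.0                # end of the current refractory period

    def _leak(self, t):
        # Move the state towards x_rest at a constant rate, without overshooting.
        decay = self.leak_rate * (t - self.t_last)
        if self.x > self.x_rest:
            self.x = max(self.x_rest, self.x - decay)
        else:
            self.x = min(self.x_rest, self.x + decay)
        self.t_last = t

    def receive(self, j, t):
        """Process a spike on input j at time t.

        Returns +1 or -1 if the neuron fires, 0 otherwise.
        """
        if t < self.t_ready:
            return 0  # assumption: inputs are ignored while refractory
        self._leak(t)
        self.x += self.weights[j]
        if self.x >= self.x_th:
            polarity = 1
        elif self.x <= -self.x_th:
            polarity = -1
        else:
            return 0
        self.x = self.x_rest                   # reset after firing
        self.t_ready = t + self.t_refractory   # enter the refractory period
        return polarity


if __name__ == "__main__":
    neuron = LIFNeuron(weights=[0.6, -0.6])
    spikes = [(0, 0.000), (0, 0.010), (0, 0.012), (1, 0.020), (1, 0.021)]
    print([neuron.receive(j, t) for j, t in spikes])
```

Because the state is only updated when a spike arrives, the leak is applied lazily for the time elapsed since the previous update, which keeps the model compatible with an asynchronous event stream.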

The main drawback of this type of network is that the model is not easily differentiable, so backpropagation cannot be applied directly. One way to overcome this issue is to train a frame-based model (with frames obtained by integrating the events that occur within small temporal windows of a few milliseconds) and then convert the learned weights by means of ad hoc rules. This approach was used by Pérez-Carrasco et al., who proposed a method to convert a trained frame-based ConvNet into an event-based one. A similar approach was adopted by O’Connor et al., who used a Deep Belief Network for classification. A completely different approach is that of J. H. Lee et al., who use a differentiable approximation of the model to which backpropagation can be applied. Finally, learning in spiking neural networks can also be performed with biologically inspired rules for updating synaptic weights that make explicit use of spike timing, such as the STDP (spike-timing-dependent plasticity) learning rule, which updates the strength of each synapse based on the delay between pre-synaptic and post-synaptic spikes.
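The frame construction mentioned above (integrating events over small temporal windows) could look roughly like the following sketch. It is not taken from any of the cited works: the function name `events_to_frames`, the use of integer microsecond timestamps and the choice of summing signed polarities per pixel are assumptions made for the example.

```python
def events_to_frames(events, width, height, window_us):
    """Integrate (x, y, p, t) events into one frame per temporal window.

    Assumptions: events are sorted by timestamp, t is an integer number of
    microseconds, and p is +1 or -1. Each frame holds, for every pixel,
    the sum of the polarities of the events that fell inside the window.
    """
    frames = []
    if not events:
        return frames
    t0 = events[0][3]  # the first event marks the start of the first window
    for x, y, p, t in events:
        index = (t - t0) // window_us
        # Create empty frames up to the one this event belongs to.
        while len(frames) <= index:
            frames.append([[0] * width for _ in range(height)])
        frames[index][y][x] += p
    return frames


if __name__ == "__main__":
    stream = [(0, 0, 1, 0), (1, 0, -1, 1000), (0, 0, 1, 4999), (1, 1, 1, 5000)]
    for frame in events_to_frames(stream, width=2, height=2, window_us=5000):
        print(frame)
```

With a 5 ms window the first three events land in the first frame and the last one opens a second frame. The resulting frames can then be fed to a conventional frame-based model for training.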

Most of the solutions proposed for object recognition with spiking neural networks (B. Zhao et al., G. Orchard et al., T. Masquelier et al.) make use of the HMAX hierarchical model, a biologically plausible model of computation in the primary visual cortex, and of Gabor filters, which are a good approximation of the responses of simple cells in the cortex. The main difference between these models is the way in which the features of the S2 layer are learned during training.
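As an illustration of the Gabor filters mentioned above, the sketch below builds a single 2D Gabor kernel (a Gaussian envelope multiplied by a cosine carrier) using only the standard library. The function name `gabor_kernel` and every parameter value are hypothetical; the cited works use their own filter sizes, orientations and scales.

```python
import math


def gabor_kernel(size, wavelength, theta, sigma, gamma=0.5, psi=0.0):
    """Return a size x size Gabor kernel as a list of rows.

    size       -- odd kernel side length in pixels
    wavelength -- wavelength of the cosine carrier, in pixels
    theta      -- orientation of the filter, in radians
    sigma      -- standard deviation of the Gaussian envelope
    gamma      -- spatial aspect ratio of the envelope
    psi        -- phase offset of the carrier
    """
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate the coordinates by the filter orientation.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(2 * math.pi * xr / wavelength + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


if __name__ == "__main__":
    # Four orientations, as an example of a small filter bank.
    bank = [gabor_kernel(7, 4.0, k * math.pi / 4, 2.0) for k in range(4)]
    print(len(bank), "kernels of size", len(bank[0]), "x", len(bank[0][0]))
```

A bank of such kernels at several orientations plays the role of the simple-cell layer; convolving the input with each kernel gives orientation-selective responses.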

Recurrent Neural Networks

Recurrent neural network architectures are another family of models well suited to this type of data, because of their ability to maintain an internal state and capture temporal relations across sequences of inputs. In particular, the Long Short-Term Memory (LSTM) network excels at remembering values over both long and short durations. Good results have been obtained by D. Neil et al. with Phased LSTM, a modification of the classical LSTM cell that can learn from sequences of inputs sampled at irregular times. The modification consists of a time gate kt that regulates the updates of the cell state ct and of the output ht. The opening of this gate is controlled by an oscillation whose parameters (the period, the ratio ron of the open phase to the period, and the phase shift s) are learned during training.
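The behaviour of the time gate can be sketched for a single scalar unit as follows. The piecewise-linear shape of the gate and the small closed-phase leak `alpha` follow the formulation in the Phased LSTM paper rather than the text above; the function names and the numeric values are chosen only for illustration, and in the real model the parameters are learned per unit.

```python
def time_gate(t, tau, s, r_on, alpha=0.001):
    """Openness k_t of the time gate at time t.

    tau   -- period of the oscillation
    s     -- phase shift
    r_on  -- ratio of the open phase to the period
    alpha -- small leak applied while the gate is closed
    """
    phi = ((t - s) % tau) / tau       # position inside the cycle, in [0, 1)
    if phi < 0.5 * r_on:
        return 2.0 * phi / r_on        # first half of the open phase: gate opens
    if phi < r_on:
        return 2.0 - 2.0 * phi / r_on  # second half of the open phase: gate closes
    return alpha * phi                 # closed phase: only a small leak


def gated_update(k, proposed, previous):
    """Blend a proposed value with the previous one according to the gate k."""
    return k * proposed + (1.0 - k) * previous


if __name__ == "__main__":
    for t in (0.125, 0.25, 0.375, 0.75):
        k = time_gate(t, tau=1.0, s=0.0, r_on=0.5)
        print(t, k, gated_update(k, proposed=2.0, previous=0.0))
```

The same `gated_update` blend is applied to both the cell state ct and the output ht. While the gate is closed the previous values are carried over almost unchanged, which is what allows inputs arriving at irregular times to be handled.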

Tools and Datasets

The following are some of the available neuromorphic datasets and tools.

Datasets:

- N-MNIST and N-Caltech101

- MNIST-DVS

- jAER data (unannotated)

- DAVIS 240C dataset

Tools:

- Event-Camera Simulator - simulator based on Blender

- jAER - tool for AER data processing and visualization

References

- Orchard, G.; Cohen, G.; Jayawant, A.; and Thakor, N. "Converting Static Image Datasets to Spiking Neuromorphic Datasets Using Saccades", Frontiers in Neuroscience, vol. 9, no. 437, Oct. 2015 [1]

- Pérez-Carrasco, J. A., Zhao, B., Serrano, C., Acha, B., Serrano-Gotarredona, T., Chen, S., et al. (2013). "Mapping from frame-driven to frame-free event-driven vision systems by low-rate coding and coincidence processing" [2]

- Maximilian Riesenhuber and Tomaso Poggio "Hierarchical models of object recognition in cortex" [3]

- J. H. Lee, T. Delbrück and M. Pfeiffer. "Training deep spiking neural networks using backpropagation" [4]

- B Zhao, R Ding, S Chen. "Feedforward categorization on AER motion events using cortex-like features in a spiking neural network" [5]

- Garrick Orchard, Cedric Meyer, Ralph Etienne-Cummings, Christoph Posch, Nitish Thakor, Ryad Benosman. "HFirst: A Temporal Approach to Object Recognition" [6]

- Timothée Masquelier, Simon J. Thorpe. "Unsupervised Learning of Visual Features through Spike Timing Dependent Plasticity" [7]

- Peter O’Connor, Daniel Neil, Shih-Chii Liu, Tobi Delbruck and Michael Pfeiffer. "Real-time classification and sensor fusion with a spiking deep belief network" [8]

- Daniel Neil, Michael Pfeiffer, and Shih-Chii Liu. "Phased LSTM: Accelerating Recurrent Network Training for Long or Event-based Sequences" [9]