Talk:Deep Learning on Event-Based Cameras

| Title: | Deep Learning on Event-Based Cameras |
|---|---|
| Description: | This project aims to study deep learning techniques on event-based cameras and develop algorithms to perform object recognition on those devices. |
| Tutor: | MatteoMatteucci |
| Start: | April 2017 |
| Number of students: | 1 |
| CFU: | 20 |


Introduction

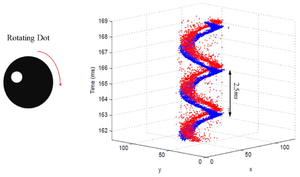

Standard cameras suffer from performance limitations imposed by their operating principle: they acquire visual information by taking snapshots of the entire scene at a fixed rate. This introduces a lot of redundancy in the data, since most of the time only a small part of the scene has changed since the previous frame. It also limits the rate at which data can be sampled, so relevant information may be missed.

Biologically inspired event-based cameras, instead, are driven by events happening in the scene and have no notion of a frame. Each pixel of the sensor emits, independently of the other pixels, an event (spike) every time it detects a change in its field of view (a change in brightness or contrast). Each event is a tuple (x,y,p,t) containing the coordinates (x,y) of the pixel that generated the event, the polarity p (whether the event corresponds to an increase or a decrease in intensity) and the timestamp t at which the event was created. The output of the sensor is therefore a continuous stream of events describing the scene, delayed only slightly with respect to the instant at which the real events happened. Systems that process the event stream directly can take advantage of this low latency and produce decisions as soon as enough relevant information has been collected. Their low latency, their small size and the fact that they do not require cooling make event-based cameras suitable for many applications, including robotics.
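As a rough illustration of the (x,y,p,t) event tuple and of consuming events as they arrive, the Python sketch below models a single idealized pixel. It relies on an assumption the text does not state: a common simplified model in which a pixel fires one event each time its log-intensity moves by a fixed contrast threshold away from the level recorded at its last event. The names `Event`, `pixel_events` and `first_decision`, the threshold value and the "decide after N events" rule are all hypothetical and chosen only for illustration. Real sensors deliver events through their own drivers and APIs.

```python
import math
from typing import Iterable, Iterator, NamedTuple, Optional, Tuple


class Event(NamedTuple):
    """One event as described in the text: pixel coordinates, polarity, timestamp."""
    x: int
    y: int
    p: int    # +1 for an increase in intensity, -1 for a decrease
    t: float  # timestamp (seconds, chosen here only for illustration)


def pixel_events(x: int, y: int,
                 samples: Iterable[Tuple[float, float]],
                 threshold: float = 0.2) -> Iterator[Event]:
    """Hypothetical idealized pixel model (assumption, not from the text):
    emit an event whenever log-intensity changes by `threshold` relative to
    the reference level stored at the last event."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    ref: Optional[float] = None
    for t, intensity in samples:
        log_i = math.log(intensity)
        if ref is None:
            ref = log_i  # the first sample only sets the reference level
            continue
        # A large change crosses the threshold several times and yields
        # several events with the same timestamp.
        while abs(log_i - ref) >= threshold:
            p = 1 if log_i > ref else -1
            ref += p * threshold
            yield Event(x, y, p, t)


def first_decision(stream: Iterable[Event], min_events: int) -> Optional[float]:
    """Hypothetical early-decision rule: act as soon as `min_events` events
    have arrived instead of waiting for a full frame. Returns the time at
    which the decision could be made, or None if the stream ends first."""
    count = 0
    for ev in stream:
        count += 1
        if count >= min_events:
            return ev.t
    return None
```

For example, a pixel whose intensity goes from 1.0 to 2.0 and then to 0.9 with `threshold=0.25` produces two positive events followed by two negative ones, and `first_decision(events, 3)` returns the timestamp of the third event without any frame having been assembled. A pixel whose intensity does not change produces no events at all, which is where the reduction in redundancy comes from.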